Among its many recent updates, OpenAI, the tech company behind ChatGPT, has announced the rollout of its advanced voice mode, which lets users hold natural conversations with its chatbot.

The company said the feature is not yet available in the European Union, the United Kingdom, Switzerland, Iceland, Norway or Liechtenstein.

OpenAI’s co-founder and CEO, Sam Altman, wrote in a post on X: “Hope you think it was worth the wait.”

advanced voice mode rollout starts today! (will be completed over the course of the week)

hope you think it was worth the wait

— Sam Altman (@sama) September 24, 2024

Here’s what you need to know about it and how to turn advanced voice mode on in ChatGPT.

What is advanced voice mode on ChatGPT?

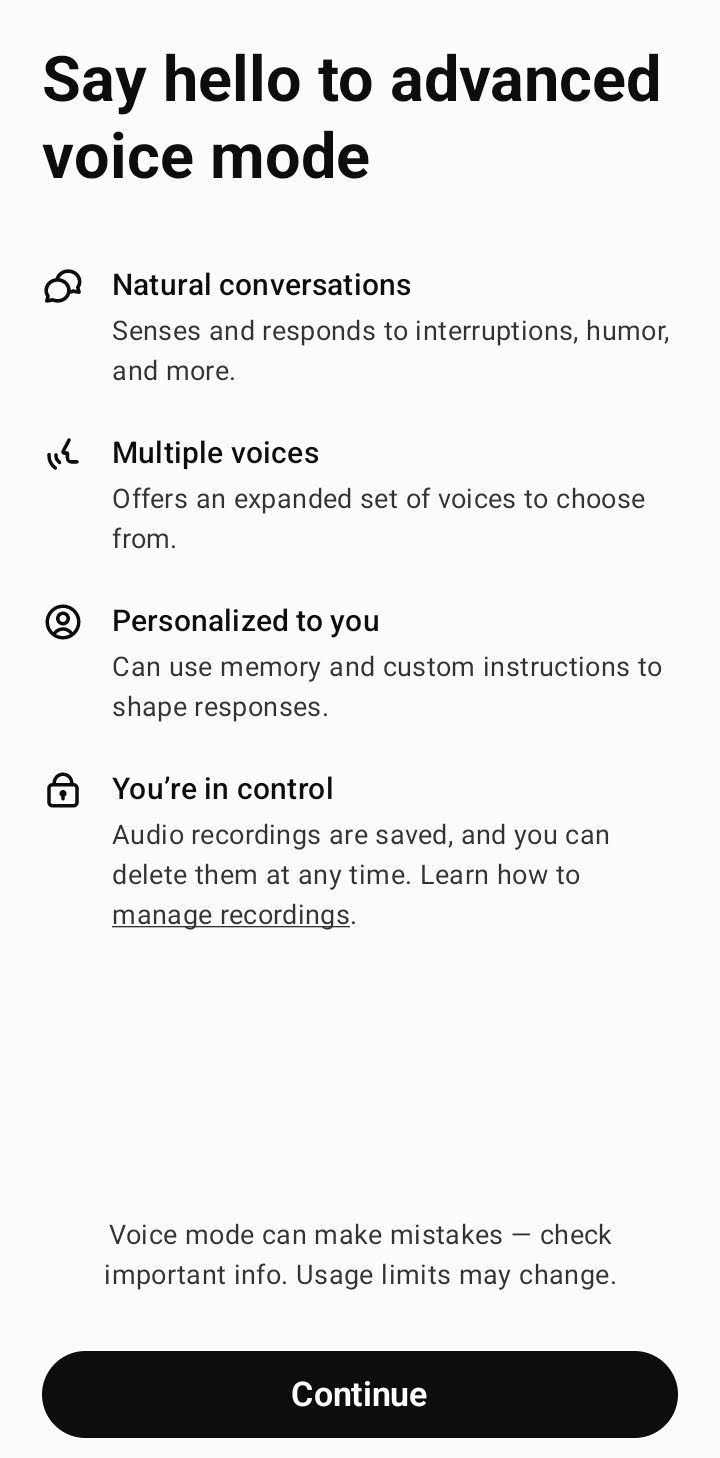

Voice conversations let users hold a spoken conversation with ChatGPT, which makes interactions feel more natural. When you ask questions or hold discussions through voice input, ChatGPT responds aloud.

There are currently two types of voice conversations – standard and advanced.

Advanced Voice is rolling out to all Plus and Team users in the ChatGPT app over the course of the week.

While you’ve been patiently waiting, we’ve added Custom Instructions, Memory, five new voices, and improved accents.

It can also say “Sorry I’m late” in over 50 languages. pic.twitter.com/APOqqhXtDg

— OpenAI (@OpenAI) September 24, 2024

ReadWrite reported on OpenAI launching its new standard voice mode last month. Standard voice uses several models to generate its response, starting by transcribing what you say into text before sending it to OpenAI's models for a reply. Although standard voice is not natively multimodal like advanced voice, its conversations still use GPT-4o alongside GPT-4o mini. Each prompt in standard voice counts toward your message limits.

Advanced voice differs in that it uses GPT-4o's native audio capabilities. As a result, OpenAI hopes to deliver more natural, real-time conversations that pick up on non-verbal cues, such as how fast the user is talking, and that can respond with emotion.

However, Plus and Team users face a daily limit on how much they can use advanced voice.

How do I activate voice mode in ChatGPT?

In July, OpenAI introduced an audio-only Advanced Voice Mode to a small group of ChatGPT Plus users, with plans to expand it to all subscribers this fall.

Screen and video sharing were part of the initial demo, but they were not included in the alpha release, and OpenAI has not provided a timeline for their inclusion.

Plus subscribers will receive an email notification when the feature is available to them. Once activated, users can toggle between Standard and Advanced Voice Modes at the top of the app when using ChatGPT’s voice feature.

To start a voice conversation, tap the Voice icon in the bottom-right corner of your screen.

If you’re using advanced voice, you’ll see a blue orb in the center of the screen when the conversation begins. For standard voice, the orb will be black instead.

During the conversation, you can mute or unmute yourself by tapping the microphone icon at the bottom left. And when you’re ready to end the chat, just hit the exit icon in the bottom right.

If it's your first time starting a voice chat, or your first time using advanced voice, you'll be asked to pick a voice. Note that the volume in the voice selector may differ slightly from what you hear during the conversation.

You can always change your voice in the settings later, and advanced voice users can even adjust their voice directly from the conversation screen using the customization menu in the top-right.

Make sure you’ve given the ChatGPT app permission to use your microphone so everything works smoothly.

If this feature isn't available to you yet, you'll see a headphones icon instead of the mute/unmute buttons. In both modes, you can interrupt ChatGPT to steer the conversation in a direction that suits you.

Is ChatGPT voice available?

If you’re signed in to ChatGPT through the iOS, macOS, or Android apps, you already have access to the standard voice feature. However, advanced voice is currently only available to Plus and Team users.

There's a daily limit on advanced voice use, which may change over time, but you'll be warned as you approach it, starting 15 minutes before you reach the limit. Once you hit the limit, your conversation will automatically switch to standard voice.

Advanced voice doesn't yet support inputs such as images. You can continue an advanced voice conversation using text or standard voice, but you can't move a conversation started in text or standard voice over to advanced voice. Advanced voice isn't available with GPTs either, so you'll need to switch to standard voice to use them.

Some accessibility features are also missing: subtitles aren't available during voice conversations, although the transcript appears in your text chat afterward. You can also only have one voice chat at a time.

Like standard voice, advanced voice can create and access memories and use custom instructions.

Is ChatGPT voice chat safe?

In August, OpenAI acknowledged some safety issues with ChatGPT's voice mode but reassured users that it was addressing them. The company published a report on GPT-4o's safety measures, covering known issues that can arise when using the model.

The "safety challenges" with ChatGPT's voice mode include familiar concerns such as generating inappropriate content, like erotic or violent material, and making biased assumptions. OpenAI has trained the model to block such outputs, but the report notes that nonverbal sounds, such as erotic moans, violent screams and gunshots, aren't fully filtered. This means prompts involving these sensitive sounds might still trigger responses.

Another challenge stems from the voice interface itself. Testers found that GPT-4o could be tricked into copying someone's voice or could accidentally start sounding like the user. To prevent this, OpenAI only allows pre-approved voices, and it has already removed a voice that resembled Scarlett Johansson's. In addition, while GPT-4o can recognize voices, it has been trained to refuse requests to identify speakers by their voice for privacy reasons, unless it's identifying a famous quote.

Red-teamers also flagged that GPT-4o could be manipulated into speaking persuasively, which poses a greater risk when it comes to spreading misinformation or conspiracy theories, given how much impact spoken words can have. The model has been trained to refuse requests for copyrighted content and has extra filters to block music. As a fun fact, it's also programmed not to sing at all. However, in this example from a user on X, the voice helps him tune his guitar by humming the note.

Advanced Voice in ChatGPT tunes my guitar. pic.twitter.com/1H6mYZTCq7

— Pietro Schirano (@skirano) September 24, 2024

How can I stop sharing audio?

You can stop sharing your audio at any time from the Data Controls page in your ChatGPT settings. Just toggle off the "Improve voice for everyone" setting.

If you don’t see “Improve voice for everyone” in your Data Controls settings, that means you haven’t shared your audio with OpenAI, and it’s not being used to train models.

If you choose to stop sharing, audio from future voice chats won’t be used for model training. However, audio clips that were previously disassociated from your account may still be used to train OpenAI’s models.

OpenAI also notes that even if you stop sharing audio, it "may still use transcriptions of those chats to train our model" if the "Improve the model for everyone" setting is still on. To opt out fully, disable "Improve the model for everyone."

Audio clips from your advanced voice chats will be stored as long as the chat remains in your chat history. If you delete the chat, the audio clips will also be deleted within 30 days, unless they’re needed for security or legal reasons. If you’ve shared your audio clips with OpenAI to help train models, those clips may still be used, but only after they’ve been disassociated from your account.

Featured image: Ideogram / Canva

The post How to turn on advanced voice mode in ChatGPT – a guide to new AI feature appeared first on ReadWrite.